- AI News

Latest AI News

Featured AI News

Get Weekly AI News!

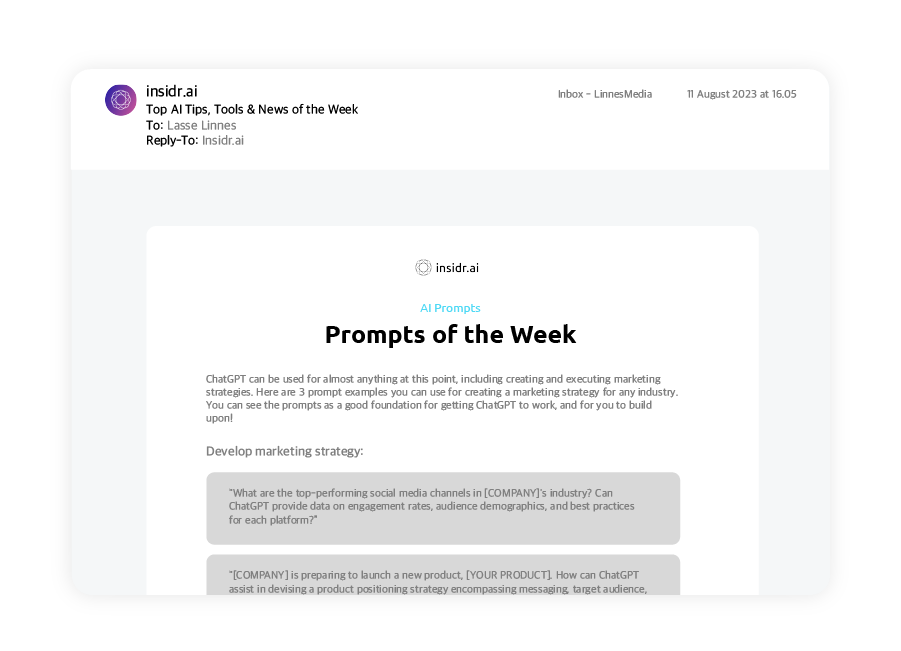

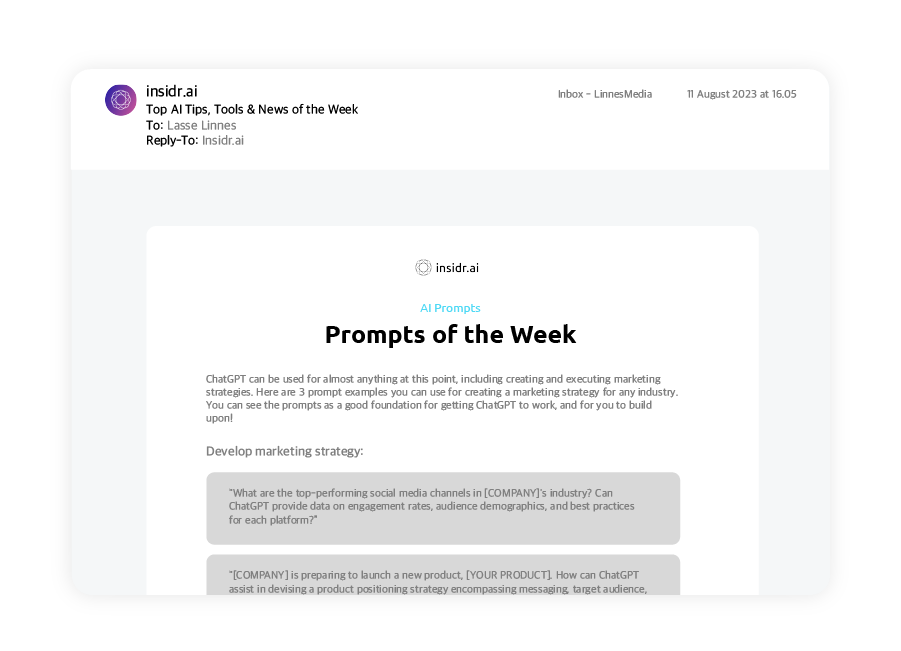

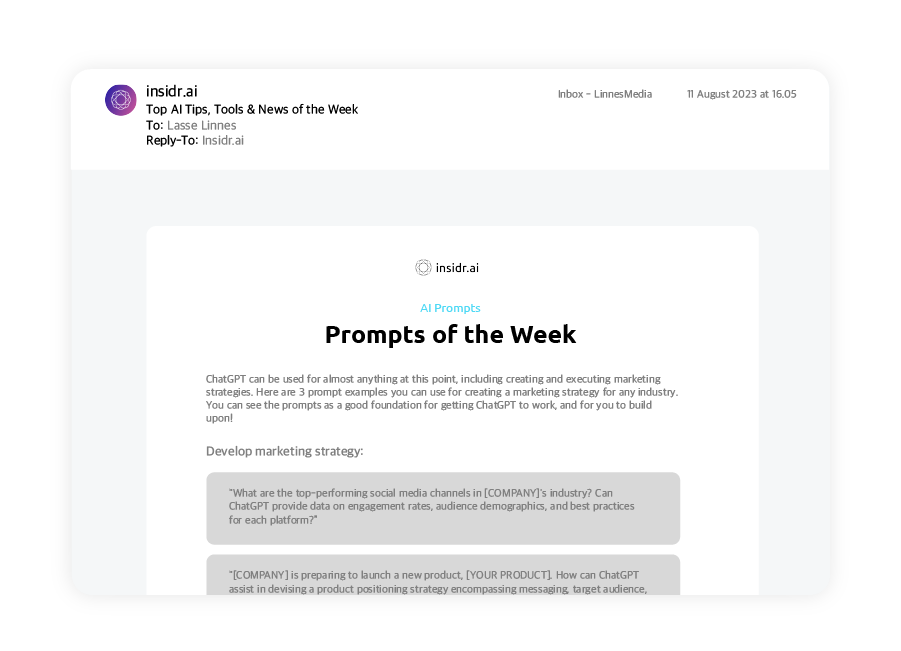

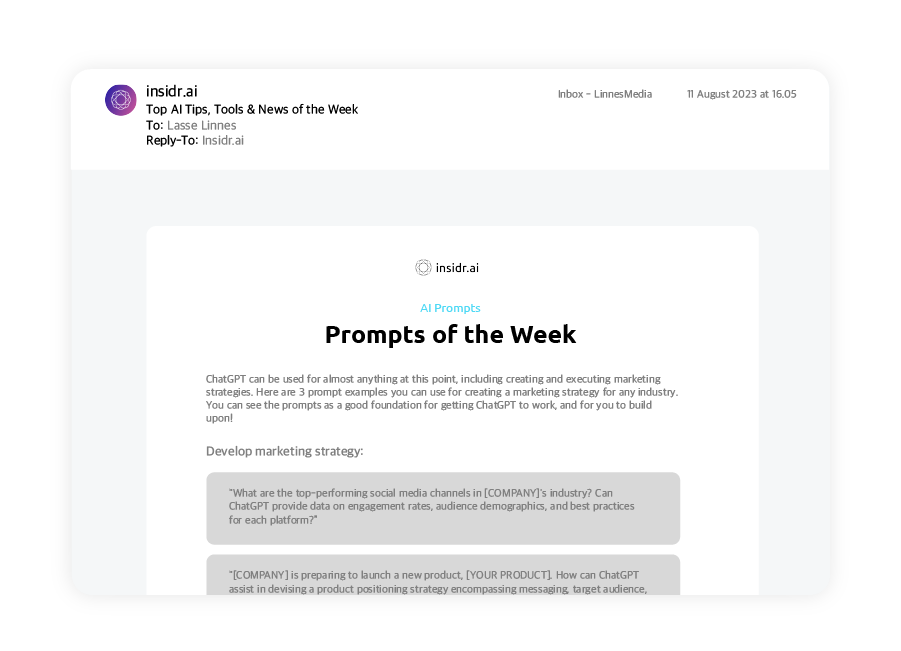

Join 50,000+ others and get a weekly newsletter filled with top-rated tools, AI tips, prompts, and news!

- AI Academy

- ChatGPT Tutorial: How to use ChatGPT for Beginners

- How to Start an AI Automation Agency: A Guide for Beginners

- How to Make Money with AI

- How to Build Chatbots

- How to Write a Prompt: Best ChatGPT Prompts

- Make money with ChatGPT

- 55+ Profitable AI Business Ideas

- How to use AI for Marketing

- Create a Profitable Blog with AI

- How to Jailbreak ChatGPT

- AI Tools

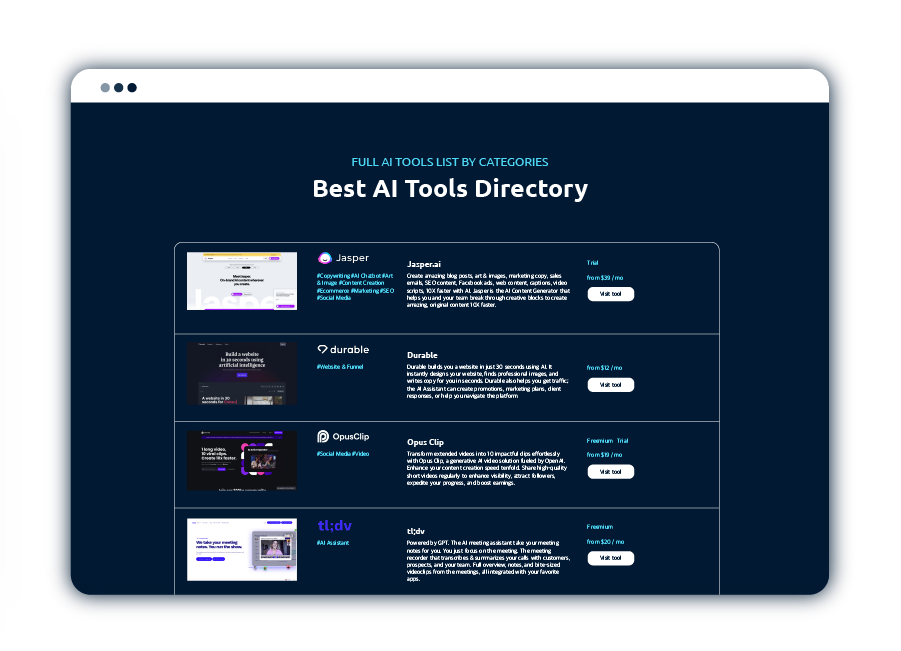

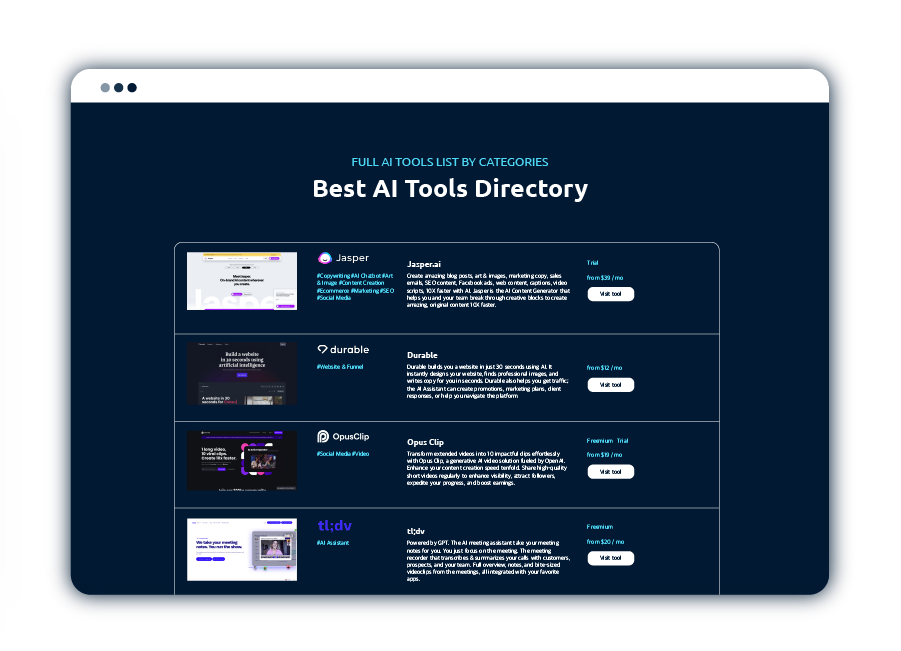

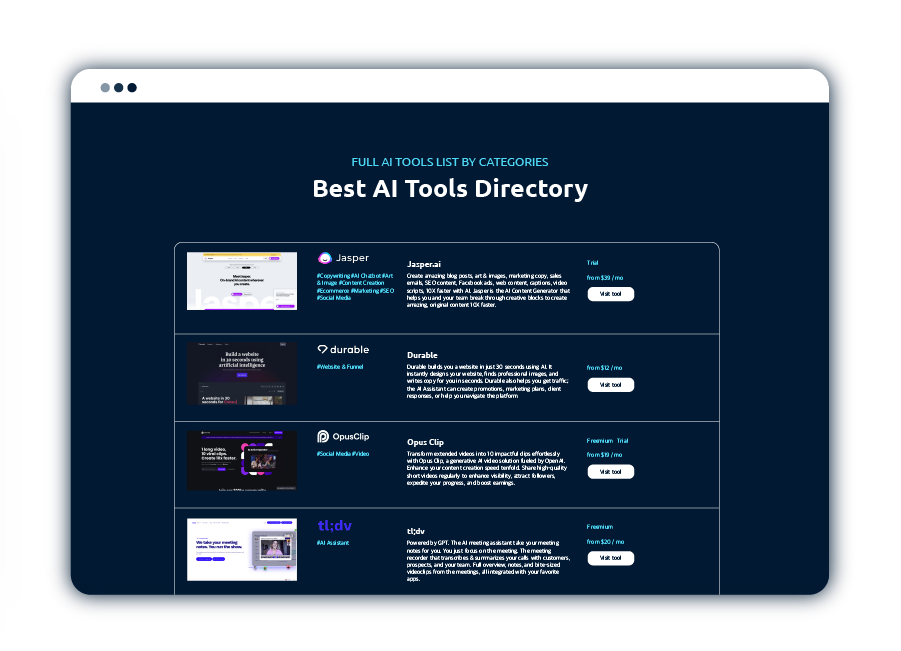

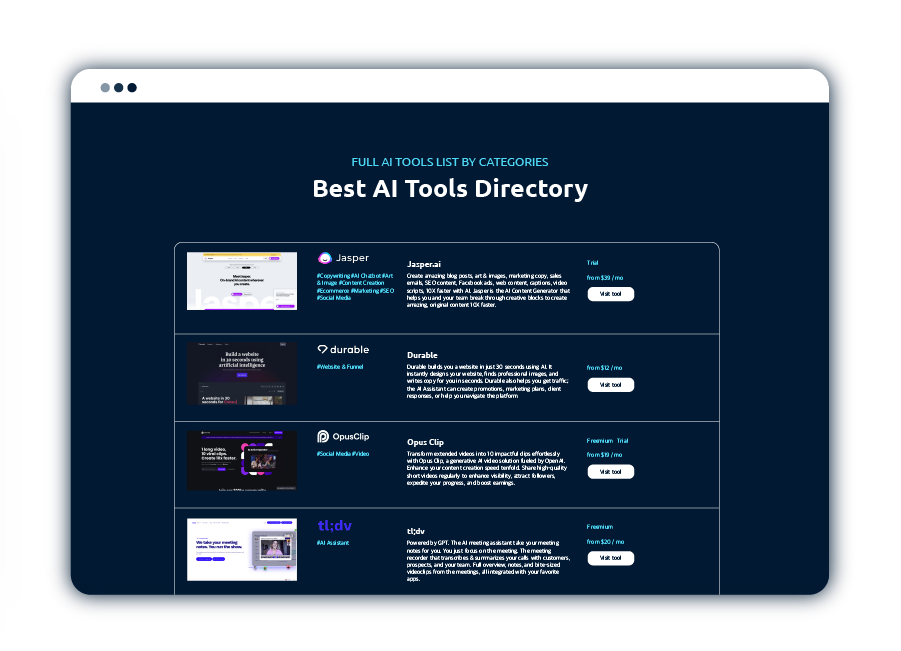

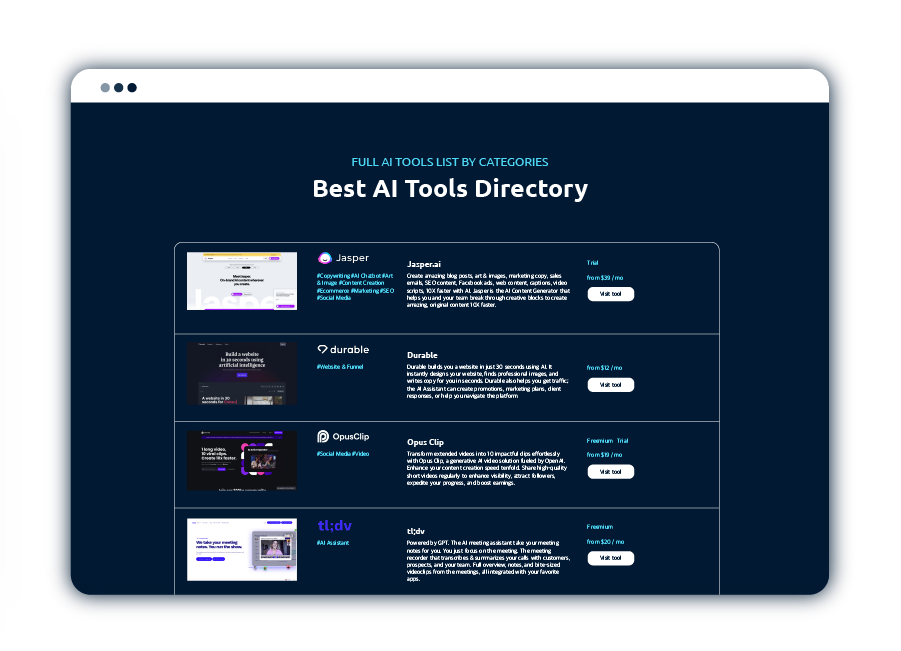

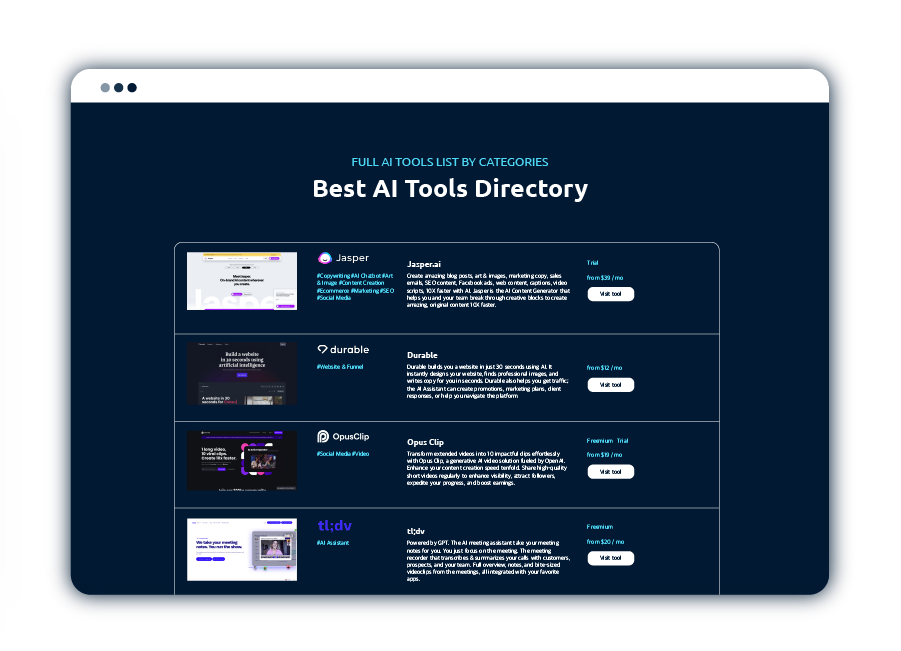

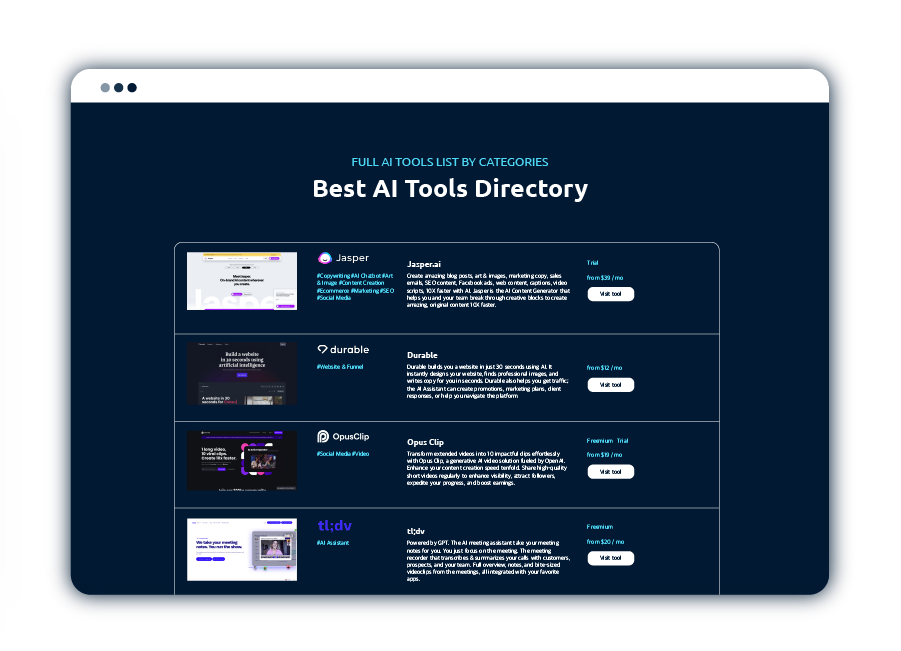

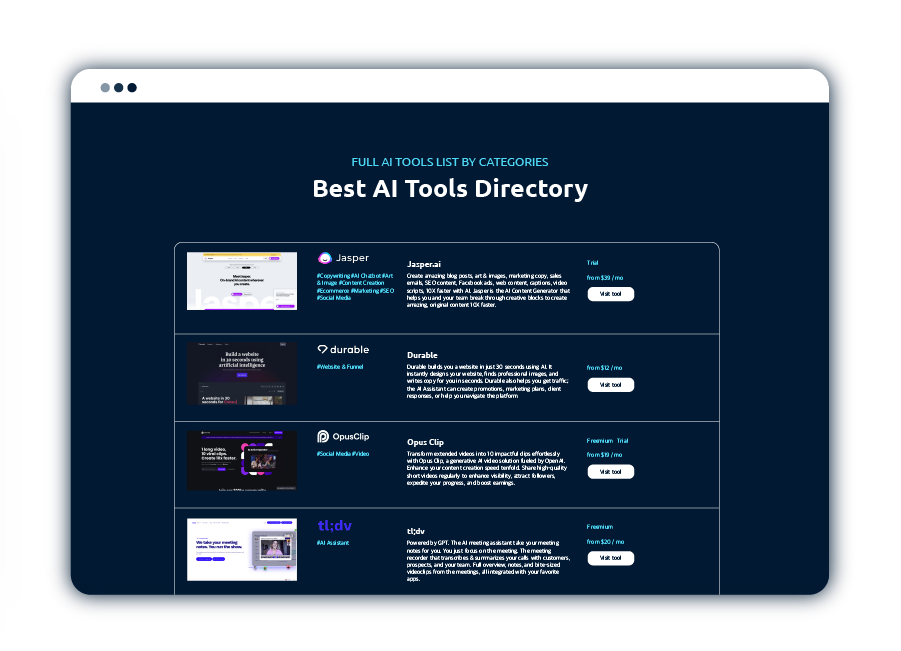

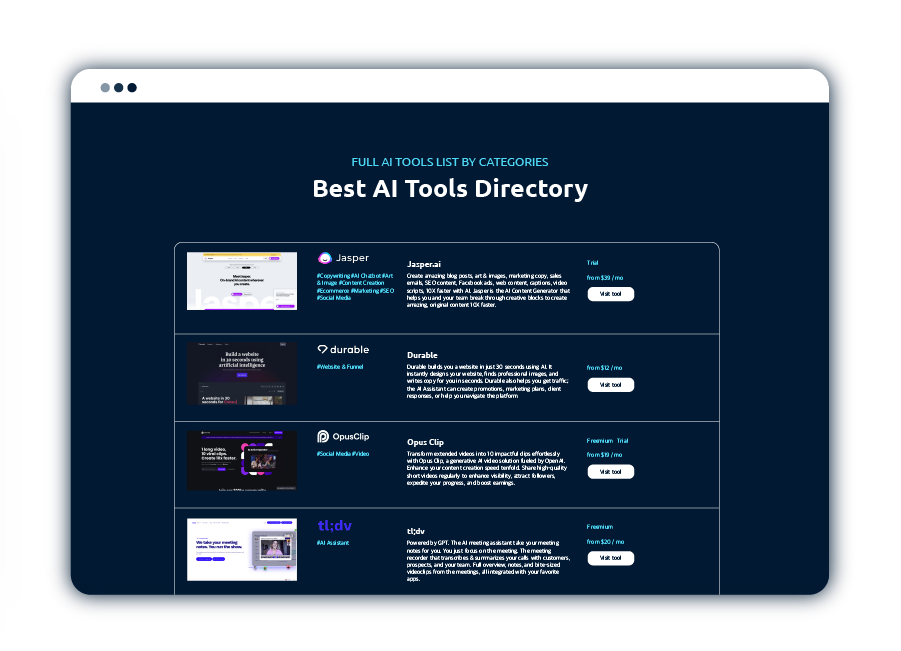

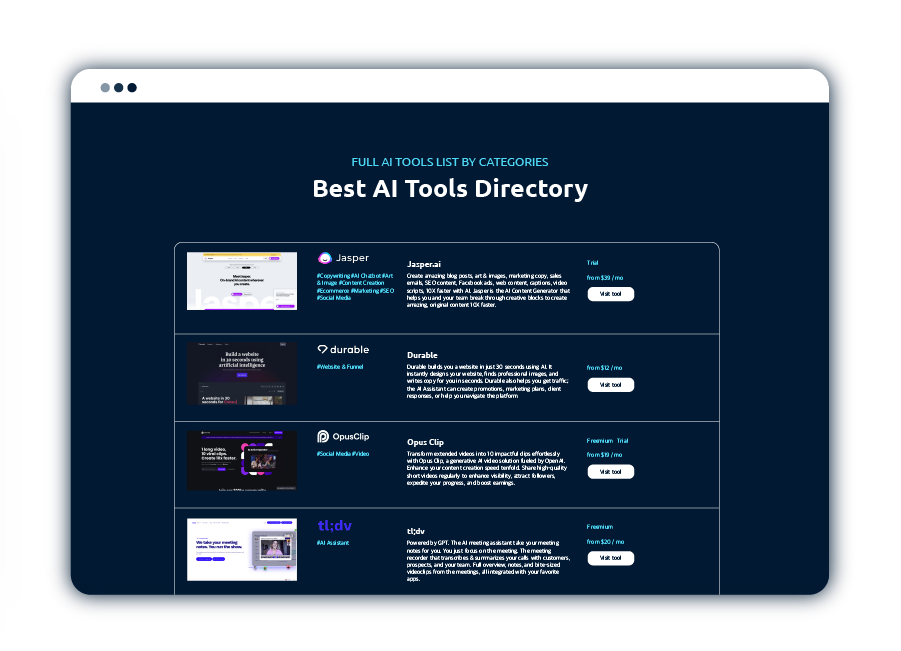

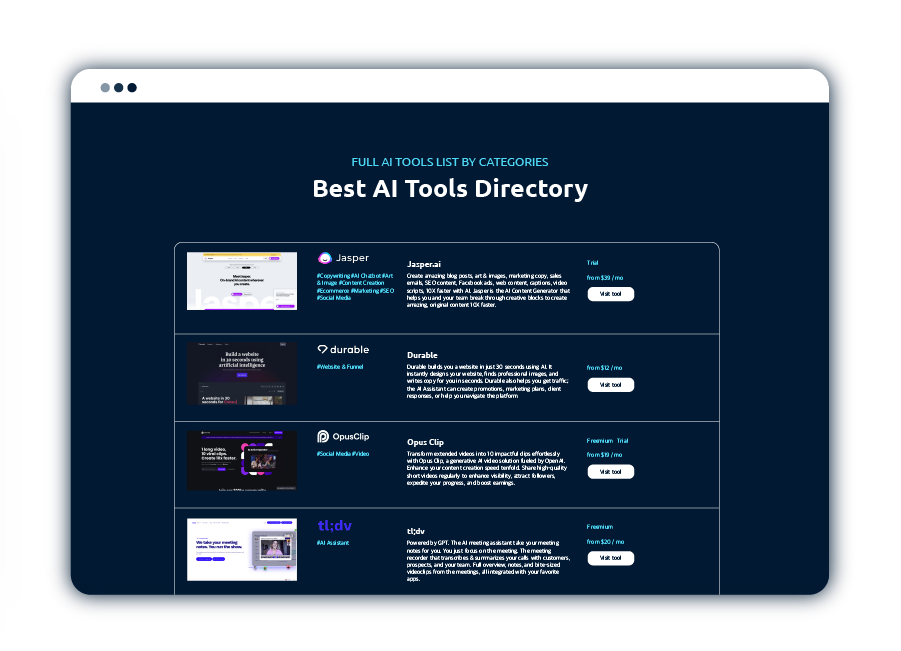

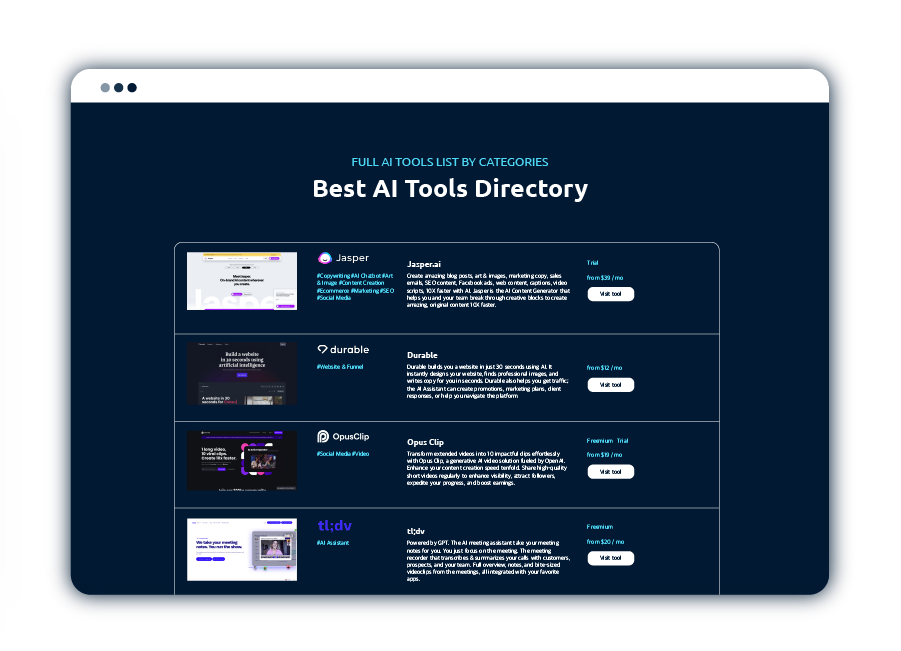

- AI Tools Directory

- AI For Business

- Marketing

- Social Media

- Content Creation

- Copywriting

- SEO

- Video

- Image & Art

- Email Writer & Assistant

- Ecommerce

- CRM & Automation

- AI Crypto Trading Bots

- AI Stock Trading Bots

- Website & Funnel

- Customer Service

- Free Tools

- AI Chatbots

- ChatGPT plugins

- Tool Reviews

Get 300+ Best AI Tools Now!

We'll send you a free list of 300+ of the best AI tools available, and we update it every week!

AI Tool Categories

- AI Solutions

- Contact
